Decision Tree Analysis in R

When you've built your R skills, you'll be able to analyze complex data, build interactive web apps, and create machine learning models.

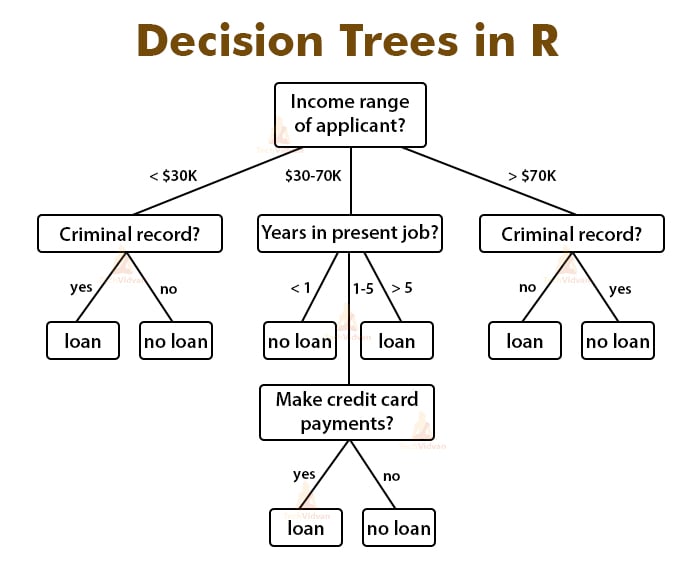

Decision tree classifier implementation in R.


Introduction to Decision Trees

One advantage of decision trees is that they make it easy to identify important variables.

In the fruit data set, weight is the weight of the fruit in grams. We can create a decision tree by hand, with a graphics program, or with specialized software. Decision trees (DTs) gave a balanced accuracy of 81%, and SVM did even better.
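
Balanced accuracy is the mean of the per-class recall values, so a classifier cannot score well on an imbalanced data set just by predicting the majority class. The minimal Python sketch below computes it from two label lists. The labels are made-up examples (1 = apple, 0 = orange), not the data behind the 81% figure.

```python
def balanced_accuracy(y_true, y_pred):
    """Mean of the per-class recall: for each class, the share of its
    samples that were predicted correctly."""
    recalls = []
    for c in sorted(set(y_true)):
        # Positions of the samples whose true label is c.
        idx = [i for i, y in enumerate(y_true) if y == c]
        hits = sum(1 for i in idx if y_pred[i] == c)
        recalls.append(hits / len(idx))
    return sum(recalls) / len(recalls)


# Made-up labels: 1 = apple, 0 = orange.
y_true = [1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 0, 1]
print(balanced_accuracy(y_true, y_pred))  # 0.625 (recall 0.75 for apples, 0.5 for oranges)
```

Plain accuracy on the same labels is 4/6, about 0.667. It is higher because the larger class dominates it.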

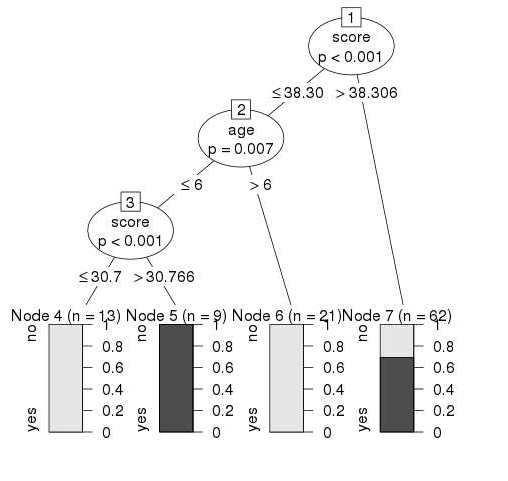

You will understand how a decision tree, either by itself or inside a tree-based ensemble, decides on the best order of features to split on and decides when to stop as it trains on the given data. R is one of the most commonly used programming languages in data mining and offers many packages and resources for data analysis, visualization, and data science. The method is known for its precise classification and for working well both in boosted decision trees and on small datasets with a lot of noise.
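
The following minimal Python sketch makes the split-selection step concrete. It assumes Gini impurity as the criterion (one common choice, used by CART) and numeric features split as x <= t. It tries every feature and every observed value as a threshold and keeps the split with the lowest weighted impurity. Repeating this at each child node is what fixes the order in which features are used. The six fruit rows (weight in grams, smoothness from 1 to 10) are invented.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(rows, labels):
    """Return (feature_index, threshold, weighted_gini) for the lowest-impurity
    split of the form row[feature] <= threshold."""
    best = None
    n = len(rows)
    for f in range(len(rows[0])):
        for t in sorted(set(r[f] for r in rows)):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue  # a useful split must send samples both ways
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[2]:
                best = (f, t, score)
    return best


# Invented fruit rows: (weight_g, smooth); labels 1 = apple, 0 = orange.
rows = [(150, 9), (170, 8), (140, 9), (130, 3), (120, 2), (125, 4)]
labels = [1, 1, 1, 0, 0, 0]
print(best_split(rows, labels))  # (0, 130, 0.0)
```

Both features separate this toy data perfectly. The function keeps the first perfect split it finds, so ties are broken by feature order.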

In general, decision trees are constructed via an algorithmic approach that identifies ways to split a data set based on different conditions. I initially tried using the data mining functionality in SQL Server; however, following your advice, I switched to using R. Next, train another regression tree, but set the maximum number of splits to 7, which is about half the mean number of splits in the default regression tree.
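
The effect of capping the number of splits can be sketched in Python. This is a simplified illustration, not how MATLAB or any R package grows trees. It uses a single numeric feature and measures impurity as the sum of squared errors (SSE). It grows best-first, always splitting the leaf whose split reduces SSE the most, until max_splits splits have been made. The function names and the staircase data are hypothetical. Every split turns one leaf into two, so a tree with s splits has s + 1 leaves.

```python
def sse(ys):
    """Sum of squared errors around the mean: the impurity of a regression node."""
    if not ys:
        return 0.0
    m = sum(ys) / len(ys)
    return sum((y - m) ** 2 for y in ys)


def best_split_1d(xs, ys):
    """Best threshold on one numeric feature; returns (sse_reduction, threshold) or None."""
    parent = sse(ys)
    best = None
    for t in sorted(set(xs))[:-1]:  # the largest value would leave the right side empty
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        gain = parent - sse(left) - sse(right)
        if best is None or gain > best[0]:
            best = (gain, t)
    return best


def grow_regression_tree(xs, ys, max_splits):
    """Best-first growth: split the leaf with the largest SSE reduction until
    max_splits splits are used. Returns leaves as (low, high, mean), x in (low, high]."""
    leaves = [(float("-inf"), float("inf"), list(zip(xs, ys)))]
    for _ in range(max_splits):
        candidates = []
        for i, (lo, hi, pts) in enumerate(leaves):
            s = best_split_1d([p[0] for p in pts], [p[1] for p in pts])
            if s is not None and s[0] > 0:
                candidates.append((s[0], i, s[1]))
        if not candidates:
            break  # no leaf can be improved any further
        _, i, t = max(candidates)
        lo, hi, pts = leaves.pop(i)
        leaves.append((lo, t, [p for p in pts if p[0] <= t]))
        leaves.append((t, hi, [p for p in pts if p[0] > t]))
    leaves.sort(key=lambda leaf: leaf[0])
    return [(lo, hi, sum(p[1] for p in pts) / len(pts)) for lo, hi, pts in leaves]


# Hypothetical staircase: 16 points, 8 steps of height 1.
xs = list(range(16))
ys = [x // 2 for x in xs]
print([m for _, _, m in grow_regression_tree(xs, ys, 7)])
print([m for _, _, m in grow_regression_tree(xs, ys, 3)])
```

With max_splits=7 the staircase is fitted exactly by 8 leaves. With max_splits=3 the tree is shallower, and each of its 4 leaves averages two steps.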

Decision Trees in R

Now let's use the loaded dummy dataset to train a decision tree classifier. A decision stump is a decision tree with just a single split, which is why it is also known as a one-level decision tree. It is known for its low predictive performance in most cases due to its simplicity.
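
A decision stump takes only a few lines of code. The Python sketch below shows one possible version. It assumes numeric features, a single x <= t test, the majority class on each side, and the number of training errors as the selection criterion. fit_stump, predict_stump, and the fruit rows are hypothetical.

```python
from collections import Counter


def fit_stump(rows, labels):
    """One-level tree: pick the (feature, threshold) with the fewest training errors
    when each side predicts its own majority label. Needs at least two distinct values."""
    best = None
    for f in range(len(rows[0])):
        for t in sorted(set(r[f] for r in rows)):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            if not right:
                continue  # threshold at the maximum value: nothing goes right
            left_label = Counter(left).most_common(1)[0][0]
            right_label = Counter(right).most_common(1)[0][0]
            errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
            if best is None or errors < best[0]:
                best = (errors, f, t, left_label, right_label)
    _, f, t, left_label, right_label = best
    return f, t, left_label, right_label


def predict_stump(stump, row):
    """Answer the stump's single question."""
    f, t, left_label, right_label = stump
    return left_label if row[f] <= t else right_label


# Hypothetical fruit rows: (weight_g, smooth); 1 = apple, 0 = orange.
rows = [(150, 9), (170, 8), (140, 9), (130, 3), (120, 2), (125, 4)]
labels = [1, 1, 1, 0, 0, 0]
stump = fit_stump(rows, labels)
print(stump)  # (0, 130, 0, 1): weight <= 130 -> orange, otherwise apple
print(predict_stump(stump, (160, 7)), predict_stump(stump, (118, 5)))  # 1 0
```

A stump asks only one question, so it underfits most real problems. Stumps are mainly useful as weak learners inside boosting.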

A decision tree in R is a machine-learning algorithm that can perform either classification or regression tree analysis. Making predictions with it is fast. Suppose that you want a regression tree that is not as complex (deep) as the ones trained using the default number of splits.

A decision tree is a tree in the same sense as other tree data structures, such as binary trees, BSTs (binary search trees), and AVL trees. It is a tree-structured classifier in which internal nodes represent the features of a dataset, branches represent the decision rules, and each leaf node represents the outcome. For the fruit example, an empty pandas DataFrame is created first to hold the fruit data set.
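
The node roles described above map directly onto a small recursive data type. This Python sketch uses a hypothetical Node class with numeric x <= threshold tests and a hand-built tree whose thresholds are invented. Prediction walks a single path from the root to a leaf, which is why making predictions is fast: the cost grows with the depth of the tree, not with the size of the training data.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Node:
    # Internal node: tests row[feature] <= threshold. Leaf: carries an outcome.
    feature: Optional[int] = None
    threshold: Optional[float] = None
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    outcome: Optional[str] = None


def predict(node: Node, row: Sequence[float]) -> str:
    # Follow one branch per level until a leaf is reached.
    while node.outcome is None:
        node = node.left if row[node.feature] <= node.threshold else node.right
    return node.outcome


# Hand-built tree over (weight_g, smooth); thresholds are invented for illustration.
tree = Node(feature=0, threshold=130,
            left=Node(outcome="orange"),
            right=Node(feature=1, threshold=5,
                       left=Node(outcome="orange"),
                       right=Node(outcome="apple")))
print(predict(tree, (150, 9)))  # apple
print(predict(tree, (120, 2)))  # orange
```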

Cross-validate the model using 10-fold cross-validation. A decision tree is a decision support tool that uses a tree-like model of decisions and their possible consequences, including chance event outcomes, resource costs, and utility. It is one way to display an algorithm that only contains conditional control statements.
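
The 10-fold procedure can be sketched in plain Python. Shuffle the row indices, deal them into 10 folds, and let each fold serve once as the test set while the model is fitted on the other nine. The fit and predict arguments are placeholders for whatever model is being validated. The majority-class model used here only keeps the example self-contained and is not a decision tree.

```python
import random
from collections import Counter


def kfold_indices(n, k=10, seed=0):
    """Shuffle the indices 0..n-1 and deal them into k folds of near-equal size."""
    if n < k:
        raise ValueError("need at least k samples so that no fold is empty")
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def cross_val_accuracy(X, y, fit, predict, k=10, seed=0):
    """Hold each fold out once, fit on the remaining folds, and average the accuracies."""
    scores = []
    for test in kfold_indices(len(X), k, seed):
        held_out = set(test)
        train = [i for i in range(len(X)) if i not in held_out]
        model = fit([X[i] for i in train], [y[i] for i in train])
        correct = sum(predict(model, X[i]) == y[i] for i in test)
        scores.append(correct / len(test))
    return sum(scores) / len(scores)


# Placeholder model: always predicts the most common training label.
def fit_majority(X, y):
    return Counter(y).most_common(1)[0][0]


def predict_majority(model, row):
    return model


X = list(range(20))
y = [0] * 15 + [1] * 5
print(cross_val_accuracy(X, y, fit_majority, predict_majority))  # 0.75
```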

Decision Tree Classification Algorithm. In MATLAB, `tree = fitctree(Tbl,ResponseVarName)` returns a fitted binary classification decision tree. The tree is based on the input variables (also known as predictors, features, or attributes) contained in the table `Tbl` and the output (response or labels) contained in `Tbl.ResponseVarName`. The returned binary tree splits branching nodes based on the values of a column of `Tbl`. Recursive partitioning works on the same basis: each split is chosen to achieve maximum homogeneity within the new partitions.

One drawback is that decision trees can have high variability in performance: small changes in the training data can produce a very different tree. Decision trees are commonly used in operations research, specifically in decision analysis, to help identify a strategy most likely to reach a goal.

There are two types of decision trees: classification trees and regression trees. If you ever have to explain the intricacies of how decision trees work to someone, hopefully you won't do too badly.

That has been really helpful.

Advantages of Decision Trees

Decision Tree is a supervised learning technique that can be used for both classification and regression problems, but it is mostly preferred for solving classification problems. A decision tree can be represented graphically as a tree with a structure of leaves and branches.

Algorithm for decision tree induction: the basic algorithm is greedy, and the tree is constructed in a top-down, recursive, divide-and-conquer manner. At the start, all the training examples are at the root. Attributes are categorical (if continuous-valued, they are discretized in advance). Examples are partitioned recursively based on selected attributes, and test attributes are selected on the basis of a heuristic or statistical measure (e.g., information gain). In the fruit data set, smooth is the smoothness of the fruit on a scale of 1 to 10.
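
The induction steps above translate fairly directly into a recursive function. This Python sketch makes four assumptions:

- ID3-style multiway splits on categorical attributes.
- Information gain (entropy reduction) as the attribute-selection measure.
- A node stops splitting when it is pure.
- A node also stops when no attributes are left, and then takes a majority vote.

build_tree and the discretized fruit rows ("light"/"heavy", "rough"/"smooth") are hypothetical.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (bits) of the class distribution."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def info_gain(rows, labels, attr):
    """Parent entropy minus the weighted entropy of the partitions on attr."""
    n = len(labels)
    remainder = 0.0
    for value in set(r[attr] for r in rows):
        subset = [y for r, y in zip(rows, labels) if r[attr] == value]
        remainder += len(subset) / n * entropy(subset)
    return entropy(labels) - remainder


def build_tree(rows, labels, attrs):
    """Top-down, recursive, divide-and-conquer induction. Returns a class label
    (leaf) or (attr, {value: subtree}) for an internal node."""
    if len(set(labels)) == 1:
        return labels[0]  # pure node: every example has the same class
    if not attrs:
        return Counter(labels).most_common(1)[0][0]  # no attributes left: majority vote
    best = max(attrs, key=lambda a: info_gain(rows, labels, a))  # greedy choice
    branches = {}
    for value in sorted(set(r[best] for r in rows)):
        idx = [i for i, r in enumerate(rows) if r[best] == value]
        branches[value] = build_tree([rows[i] for i in idx], [labels[i] for i in idx],
                                     [a for a in attrs if a != best])
    return (best, branches)


rows = [
    {"weight": "heavy", "smooth": "smooth"}, {"weight": "heavy", "smooth": "smooth"},
    {"weight": "light", "smooth": "smooth"}, {"weight": "heavy", "smooth": "rough"},
    {"weight": "light", "smooth": "rough"}, {"weight": "light", "smooth": "rough"},
]
labels = ["apple", "apple", "apple", "orange", "orange", "orange"]
tree = build_tree(rows, labels, ["weight", "smooth"])
print(tree)  # ('smooth', {'rough': 'orange', 'smooth': 'apple'})
```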

Decision tree analysis is a general predictive modelling tool with applications spanning a number of different areas. A decision tree is a type of machine learning algorithm that represents its result as a tree-like structure in which each decision is a test on a feature. In the fruit data set, the target has two unique values: 1 for apple and 0 for orange.
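
A data set with this shape (weight, smooth, target) can be generated for experiments. The Python sketch below uses only the standard library rather than pandas and NumPy. The function name and the weight and smoothness ranges for each class are invented for illustration and are not taken from any real measurements.

```python
import random


def make_fruit_data(n_per_class=50, seed=42):
    """Return rows of (weight_g, smooth, target), with target 1 = apple and 0 = orange.
    The ranges are invented for illustration, not real fruit measurements."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n_per_class):
        rows.append((round(rng.uniform(140, 200), 1), rng.randint(6, 10), 1))  # assumed apple ranges
        rows.append((round(rng.uniform(100, 150), 1), rng.randint(1, 5), 0))   # assumed orange ranges
    return rows


data = make_fruit_data()
print(len(data), data[:2])
```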

The leaves generally hold the outcomes (class labels) for the data points that reach them, and the branches are the conditions used to decide which class a data point belongs to. In simple words, decision trees can also keep a group discussion focused on reaching a decision.

NumPy is used to create the arrays for target, weight, and smooth. I used the SMOTE algorithm to rebalance the data set and tried both decision trees and SVM.
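
SMOTE (Synthetic Minority Over-sampling Technique) creates new minority-class examples by interpolating between existing ones. The Python sketch below is a simplified version. It repeatedly picks a random minority sample, finds its k nearest minority neighbours by Euclidean distance, and places a synthetic point at a random position on the segment between the sample and one of those neighbours. The reference algorithm instead loops over every minority sample a fixed number of times. The function name and the four sample points are hypothetical.

```python
import math
import random


def smote(minority, n_new, k=5, seed=0):
    """Simplified SMOTE: create n_new synthetic points, each on the segment between
    a random minority sample and one of its k nearest minority neighbours."""
    if len(minority) < 2:
        raise ValueError("need at least two minority samples to interpolate")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)  # cannot use more neighbours than other points
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        x = minority[i]
        others = [p for j, p in enumerate(minority) if j != i]
        # k nearest neighbours of x by Euclidean distance.
        neighbours = sorted(others, key=lambda p: math.dist(x, p))[:k]
        nb = rng.choice(neighbours)
        gap = rng.random()  # random position along the segment, in [0, 1)
        synthetic.append(tuple(xi + gap * (ni - xi) for xi, ni in zip(x, nb)))
    return synthetic


minority = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
new_points = smote(minority, n_new=10, k=2)
print(len(new_points), new_points[0])
```

Each synthetic point lies between two real minority samples. The class is therefore filled in where it already occurs, rather than by duplicating rows exactly.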

